Running DataHub on Cumulocity IoT Edge

This section describes how to run Cumulocity IoT DataHub on the Cumulocity IoT Edge, the local version of Cumulocity IoT.

The following sections walk you through the functionality of Cumulocity IoT DataHub Edge in detail.

For your convenience, here is an overview of the contents:

| Section | Content |
|---|---|
| DataHub Edge overview | Get an overview of DataHub Edge |
| Setting up DataHub Edge | Set up DataHub Edge and its components |
| Working with DataHub Edge | Manage offloading pipelines and query the offloaded results |
| Operating DataHub Edge | Run administrative tasks |

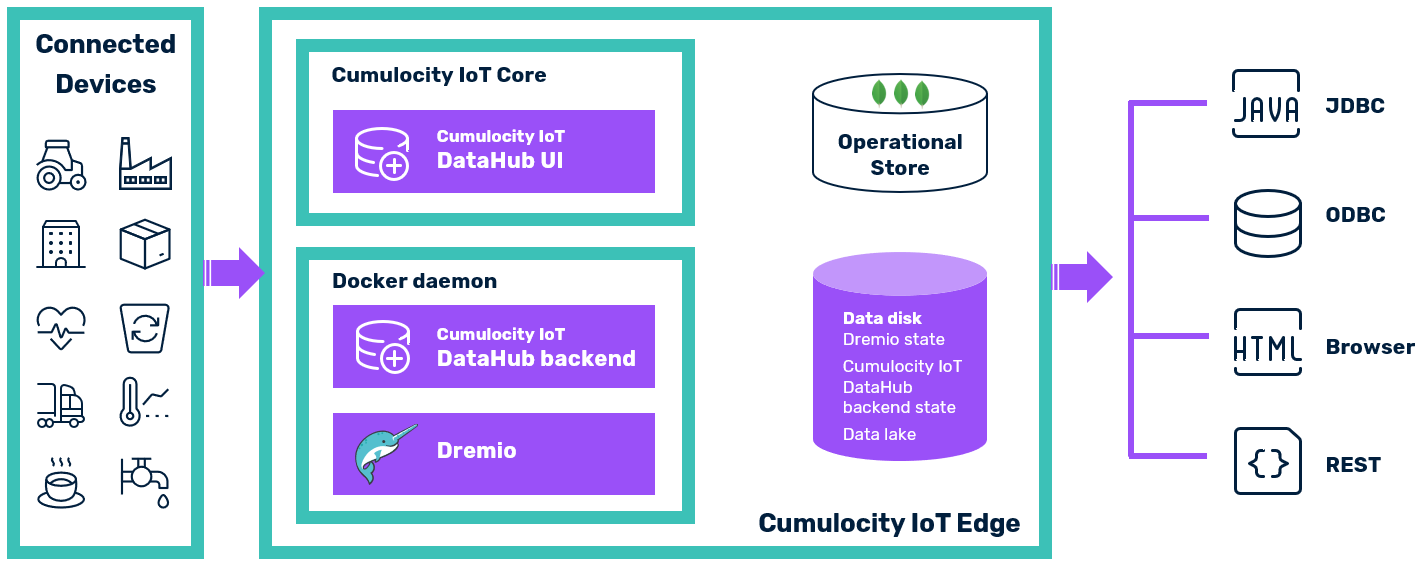

Cumulocity IoT Edge is an onsite, single-server variant of the Cumulocity IoT Core platform. It is delivered as a software appliance designed to run on industrial PCs or local servers. DataHub is available as an add-on to Cumulocity IoT Edge.

DataHub Edge offers the same functionality as the cloud variant of DataHub, but stores the data locally. You can define offloading pipelines, which regularly move data from the Operational Store of Cumulocity IoT into a data lake. In an Edge setup, a NAS is used as the data lake. Dremio, the internal engine of DataHub, can access the data lake and run analytical queries against its contents, using SQL as the query interface.

DataHub Edge consists of the following building blocks:

DataHub Edge uses the same software as DataHub, although the two variants differ in the following aspects:

| Area | DataHub Edge | DataHub (cloud) |
|---|---|---|
| High availability | Depends on the underlying virtualization technology | Depends on the cloud deployment setup |
| Vertical scalability | Yes | Yes |
| Horizontal scalability | No | Yes |
| Upgrades with no downtime | No | No |
| Root access | No | Yes, if the customer hosts it |
| Installation | Offline | Online |
| Dremio cluster setup | 1 master, 1 executor | Minimum 1 master, 1 executor |
| Dremio container management | Docker daemon | Kubernetes |
| DataHub backend container management | Docker daemon | Microservice in Cumulocity IoT Core |
| Data lakes | NAS | ADLS, Azure Storage, S3, HDFS, (NAS) |

Before setting up DataHub Edge, make sure that the following prerequisites are met:

| Item | Details |
|---|---|
| Cumulocity IoT Edge | The local version of Cumulocity IoT is set up on a Virtual Machine (VM). See also section Setting up Cumulocity IoT Edge. |
| DataHub Edge archive | You have downloaded the archive with all installation artifacts from the Software AG Empower portal. |
| Internet access | Internet access is not required. |

The hardware requirements for running a bare Cumulocity IoT Edge instance are described in section Requirements. If DataHub Edge is also running, the virtual machine has the following hardware requirements:

Hardware requirements for the host OS are excluded.

Copy the DataHub Edge archive to the Cumulocity IoT Edge.

```shell
scp datahub-<version>.tgz admin@<edge_ip_address>:/tmp
```

Log in to Cumulocity IoT Edge as admin.

```shell
ssh admin@<edge_ip_address>
```

Run the install script.

```shell
sudo /opt/c8y/utilities/install_signed_package.sh /tmp/datahub-<version>.tgz
```

The installation takes a few minutes. Once it has completed, you can delete the DataHub Edge archive.
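The three manual steps above can be combined into one shell function that you run on the machine holding the archive. This is a minimal sketch, not part of the product: the function name install_datahub_edge and the DRY_RUN switch are hypothetical, and it assumes that admin can log in via SSH and run sudo on the Edge VM. The scp, ssh, and install_signed_package.sh calls mirror the commands shown above.

```shell
# Hypothetical helper: copy the DataHub Edge archive to the Edge VM, run the
# signed package installer there, and remove the archive afterwards.
# Set DRY_RUN=1 to print the commands instead of executing them.
install_datahub_edge() {
  local archive="$1" edge_ip="$2" name
  if [[ -z "$archive" || -z "$edge_ip" ]]; then
    echo "usage: install_datahub_edge ARCHIVE EDGE_IP" >&2
    return 2
  fi
  name="$(basename "$archive")"

  local run=()
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    run=(echo)   # prefix every command with echo in dry-run mode
  fi

  # Step 1: copy the archive to /tmp on the Edge VM.
  "${run[@]}" scp "$archive" "admin@${edge_ip}:/tmp" || return
  # Steps 2 and 3: log in as admin and run the installer with sudo.
  # -t allocates a terminal so that sudo can prompt for a password if needed.
  "${run[@]}" ssh -t "admin@${edge_ip}" \
    "sudo /opt/c8y/utilities/install_signed_package.sh /tmp/${name}" || return
  # After completion, the archive is no longer needed.
  "${run[@]}" ssh "admin@${edge_ip}" "rm -f /tmp/${name}"
}
```

For example, calling it with DRY_RUN=1 and the placeholders datahub-<version>.tgz and <edge_ip_address> replaced by real values prints the three commands without running them, which is a cheap way to review them first.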

The install script runs the following basic steps:

The Docker containers are restarted automatically if a container fails or the application running inside it is no longer reachable.
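To see whether both containers are currently up, for example after an automatic restart, you can ask Docker for their state. A minimal sketch, assuming the container names cdh-master and cdh-executor used with docker logs in this section and that the admin user may run docker commands (otherwise prefix them with sudo); the helper name is hypothetical.

```shell
# Hypothetical helper: report whether the DataHub Edge containers are running.
# Returns 0 only if both containers are running.
check_datahub_containers() {
  local name state rc=0
  for name in cdh-master cdh-executor; do
    # .State.Running is "true" while the container's main process is up.
    state="$(docker inspect --format '{{.State.Running}}' "$name" 2>/dev/null)"
    if [[ "$state" == "true" ]]; then
      echo "$name: running"
    else
      echo "$name: NOT running"
      rc=1
    fi
  done
  return "$rc"
}
```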

The containers are configured to store their application state on the data disk under /opt/mongodb:

Warning: You must not modify the contents of these folders as this may corrupt your installation.

The different DataHub Edge interfaces can be accessed in the same way as in a cloud deployment of DataHub.

| Interface | Description |
|---|---|
| DataHub Edge UI | The UI can be accessed via the application switcher after you have logged in to the Cumulocity IoT Edge UI. Alternatively, you can access it directly at http://edge_domain_name/apps/datahub-ui or https://edge_domain_name/apps/datahub-ui, depending on whether TLS/SSL is used. A login is required as well. |
| Dremio UI | On the DataHub Edge home page you will find a link to the Dremio UI. Alternatively, you can access it directly at http://datahub.edge_domain_name or https://datahub.edge_domain_name, depending on whether TLS/SSL is used. You can log in as admin using the password 'datahub4edge@customer!'. |
| DataHub JDBC/ODBC | You can find the connection settings for JDBC/ODBC on the Home page of the DataHub Edge UI. |
| DataHub REST API | The URL of the microservice that hosts the API is https://edge_domain_name/service/datahub. |
| Dremio REST API | The Dremio URL for REST API requests is either http://datahub.edge_domain_name or https://datahub.edge_domain_name, depending on whether TLS/SSL is used. |
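If you script against these interfaces, their URLs can be derived from the Edge domain name and whether TLS/SSL is enabled. The sketch follows the URL patterns in the table; the helper name and its yes/no TLS argument are hypothetical.

```shell
# Hypothetical helper: print the DataHub Edge interface URLs for a domain.
# Usage: datahub_edge_urls <edge_domain_name> [yes|no]   (TLS/SSL, default yes)
datahub_edge_urls() {
  local domain="$1" use_tls="${2:-yes}" scheme=http
  if [[ "$use_tls" == yes ]]; then
    scheme=https
  fi
  echo "DataHub Edge UI:  ${scheme}://${domain}/apps/datahub-ui"
  echo "Dremio UI/REST:   ${scheme}://datahub.${domain}"
  # The table lists the DataHub REST API with https only.
  echo "DataHub REST API: https://${domain}/service/datahub"
}
```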

Info: For JDBC/ODBC you have to configure Cumulocity IoT Edge so that port 31010 can be accessed from the host system. For instructions on port forwarding see section “Setting up port forwarding” under Setting up the environment.
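To verify the port forwarding for JDBC/ODBC from the host system, you can test whether a TCP connection to port 31010 succeeds. This sketch relies on bash's /dev/tcp pseudo-device and the coreutils timeout command; the helper name and the three-second timeout are assumptions. A successful connection only shows that the port is reachable, not that JDBC/ODBC logins will work.

```shell
# Hypothetical helper: check whether host:port accepts TCP connections.
# Usage: check_jdbc_port <edge_host> [port]   (port defaults to 31010)
check_jdbc_port() {
  local host="$1" port="${2:-31010}"
  # Opening /dev/tcp/HOST/PORT in bash attempts a TCP connection.
  if timeout 3 bash -c ": > /dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} is reachable"
  else
    echo "${host}:${port} is NOT reachable; check the port forwarding" >&2
    return 1
  fi
}
```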

The definition and assignment of permissions and roles is done in the same way as in a cloud deployment. See section Defining DataHub permissions and roles for details.

The setup of the Dremio account and the data lake is done in the same way as in a cloud deployment. See section Setting up Dremio account and data lake for details.

DataHub Edge is configured to use a NAS as the data lake. When configuring the NAS, use /datalake as the mount path. This path is mounted to /opt/mongodb/cdh-master/datalake.

DataHub Edge offers the same set of functionality as the cloud variant. See section Working with DataHub for details on configuring and monitoring offloading jobs, querying offloaded Cumulocity IoT data, and refining offloaded Cumulocity IoT data.

Similar to the cloud variant, the DataHub Edge UI allows you to check system information and view audit logs. See the section on Operating DataHub for details.

When managing DataHub Edge, the following standard tasks are also relevant.

If problems occur, you should follow these steps:

If you still need to contact product support, include the output of the diagnostics script. See the section on Diagnostics for details of how to run it.

You can check the status of the backend on the Administration page of the DataHub UI. Alternatively, you can query the isalive endpoint, which should produce output similar to the following:

```shell
curl --user admin:your_password https://edge_domain_name/service/datahub/isalive
```

```json
{
  "timestamp" : 1582204706844,
  "version" : {
    "versionId" : "10.6.0.0.337",
    "build" : "202002200050",
    "scmRevision" : "4ddbb70bf96eb82a2f6c5e3f32c20ff206907f43"
  }
}
```

If the backend cannot be reached, you will get an error response.
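For scripted health checks, the isalive call can be wrapped so that it fails on HTTP errors and prints only the reported versionId. This is a sketch with hypothetical helper names; it extracts the value with sed rather than a JSON parser, which assumes the response layout shown in the example above.

```shell
# Hypothetical helper: read an isalive JSON document on stdin and print the
# value of "versionId" (works for single-line and pretty-printed JSON).
extract_version_id() {
  sed -n 's/.*"versionId"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Hypothetical helper: query the backend and print its versionId.
# Usage: datahub_backend_version <edge_domain_name> <user> <password>
datahub_backend_version() {
  local domain="$1" user="$2" password="$3" body
  # --fail makes curl return non-zero on HTTP errors such as 401 or 503;
  # --max-time bounds the wait if the backend does not answer.
  body="$(curl --silent --show-error --fail --max-time 10 \
    --user "${user}:${password}" \
    "https://${domain}/service/datahub/isalive")" || return
  printf '%s\n' "$body" | extract_version_id
}
```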

You can check the status of Dremio using the server_status endpoint:

```shell
curl http://datahub.edge_domain_name/apiv2/server_status
```

```
"OK"
```

Dremio is running if OK is returned. No response will be returned if it is not running or inaccessible.
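A scripted variant of this check could look as follows. It treats anything other than the JSON string "OK", including a timeout, as "not running". The helper names and the ten-second timeout are assumptions.

```shell
# Hypothetical helper: true if a server_status response body means "OK".
# Strips the JSON quotes and any whitespace before comparing.
is_ok_status() {
  [[ "$(printf '%s' "$1" | tr -d '"[:space:]')" == "OK" ]]
}

# Hypothetical helper: check Dremio via its server_status endpoint.
# Usage: dremio_is_up http://datahub.edge_domain_name
dremio_is_up() {
  local base_url="$1" status
  # --max-time bounds the wait, since an unreachable Dremio may not answer.
  status="$(curl --silent --max-time 10 "${base_url}/apiv2/server_status")" || return 1
  is_ok_status "$status"
}
```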

The installation log file is stored at /var/log/cdh.

To access the logs of the DataHub and Dremio containers, use the docker logs command. To follow the logs of cdh-master, run:

```shell
docker logs -f cdh-master
```

To follow the logs of cdh-executor, run:

```shell
docker logs -f cdh-executor
```

The containers are configured to rotate their log files, using a rotation period of two days and a maximum file size of 10 MB.
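When preparing a support request, it can help to save recent container logs to files instead of following them live. A minimal sketch with a hypothetical helper name, output directory, and a default time window of 24 hours; because of the log rotation described above, older entries may no longer be available.

```shell
# Hypothetical helper: write recent logs of both DataHub Edge containers to
# <out_dir>/<container>.log.
# Usage: collect_datahub_logs [out_dir] [since]   e.g. collect_datahub_logs /tmp/logs 12h
collect_datahub_logs() {
  local out_dir="${1:-/tmp/datahub-logs}" since="${2:-24h}" name
  mkdir -p "$out_dir" || return
  for name in cdh-master cdh-executor; do
    # docker logs replays the container's stderr on stderr, so capture both.
    docker logs --since "$since" "$name" > "${out_dir}/${name}.log" 2>&1 || return
  done
  echo "Logs written to ${out_dir}"
}
```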

Cumulocity IoT Edge uses Monit for management and monitoring of relevant processes. See the section on Monitoring for details. The DataHub Edge processes, namely the DataHub backend and the Dremio nodes, are also monitored by Monit.

The data disk stores the state of DataHub and Dremio and also serves as the data lake. To ensure that the system works properly, the disk must not run out of space. The main factors in the disk space consumption of DataHub Edge are the Dremio job profiles and the data lake contents.
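To notice early that the data disk is filling up, you can compare its usage with a threshold, for example from a cron job. The sketch assumes that /opt/mongodb resides on the data disk, as described in this section; the helper names and the 80 percent default threshold are assumptions, not DataHub Edge settings.

```shell
# Hypothetical helper: print the usage (in percent) of the file system
# that holds the given path.
disk_usage_percent() {
  # With df -P, the 5th column of the second line is "Use%", e.g. "42%".
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Hypothetical helper: warn and return 1 if usage reaches the threshold.
# Usage: check_data_disk [path] [threshold_percent]
check_data_disk() {
  local path="${1:-/opt/mongodb}" threshold="${2:-80}" used
  used="$(disk_usage_percent "$path")"
  if [[ ! "$used" =~ ^[0-9]+$ ]]; then
    echo "cannot determine disk usage of ${path}" >&2
    return 2
  fi
  if (( used >= threshold )); then
    echo "WARNING: ${path} is ${used}% full (threshold ${threshold}%)" >&2
    return 1
  fi
  echo "${path} is ${used}% full"
}
```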

Dremio maintains a history of job details and profiles, which can be inspected in Dremio’s job log, i.e. the “Jobs” page of the Dremio UI. This job history must be cleaned up regularly to free the resources necessary for storing it.

Dremio is configured to perform the cleanup of job results automatically without downtime. The default value for the maximum age of stored job results is seven days. To change that value, a Dremio administrator has to modify the support property jobs.max.age_in_days. The changes become effective within 24 hours or after restarting Dremio.

The data lake contents are not automatically purged, as the main purpose of DataHub is to maintain a history of data. However, if disk space is critical and cannot be freed otherwise, parts of the data lake contents need to be deleted.
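Before deleting anything, it helps to see which folders of the data lake take up the most space. This sketch lists folder sizes in KiB, largest first (the first line is the total of the folder itself); the helper name and the default depth are hypothetical, and the default path is the host folder to which the /datalake mount path is mapped.

```shell
# Hypothetical helper: list data lake folders by size, largest first.
# Usage: datalake_usage [dir] [depth]
datalake_usage() {
  local dir="${1:-/opt/mongodb/cdh-master/datalake}" depth="${2:-1}"
  # du -k reports sizes in KiB; -d limits how deep the listing goes.
  du -k -d "$depth" "$dir" | sort -rn
}
```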

Browse to the data lake folder /opt/mongodb/cdh-master/datalake. The data within the data lake is organized hierarchically. Delete the folders for the time periods you no longer need. After that, run the following query in Dremio:

```sql
ALTER PDS <deleted_folder_path> REFRESH METADATA FORCE UPDATE
```

Warning: Data deleted from the data lake cannot be recovered.

DataHub’s runtime state as well as the data lake containing offloaded data reside in the Cumulocity IoT Edge server VM. To back up and restore DataHub, including its runtime state and data, we recommend backing up and restoring the Cumulocity IoT Edge server VM as described in section Backup and restore.